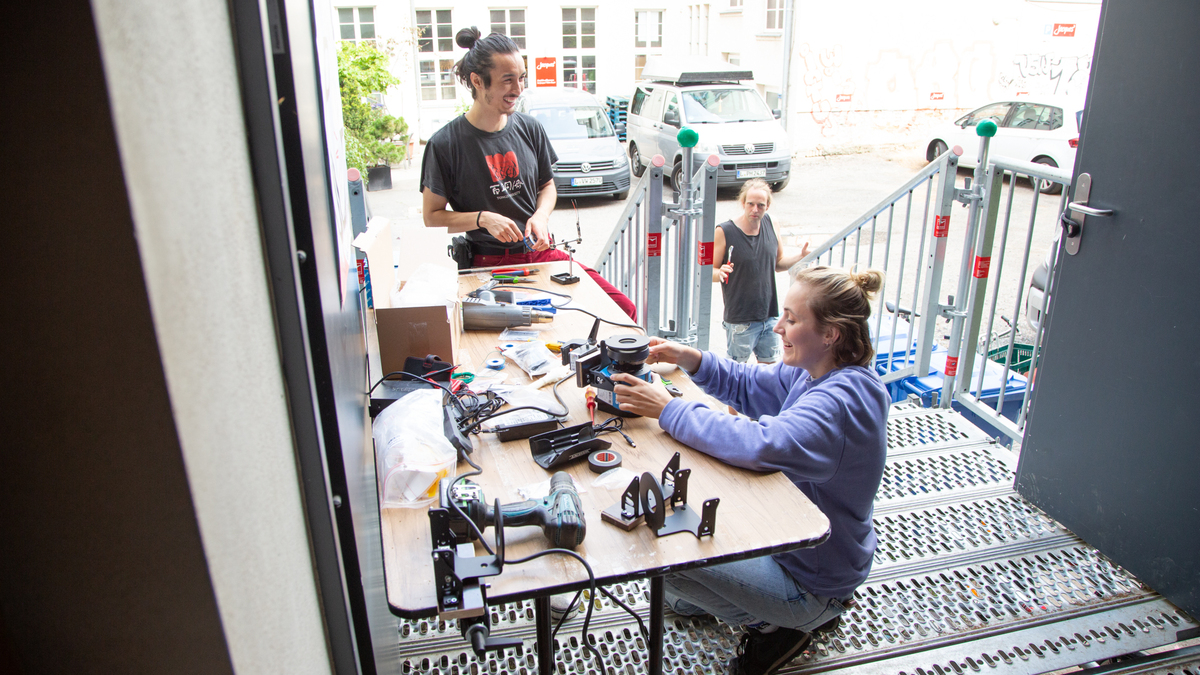

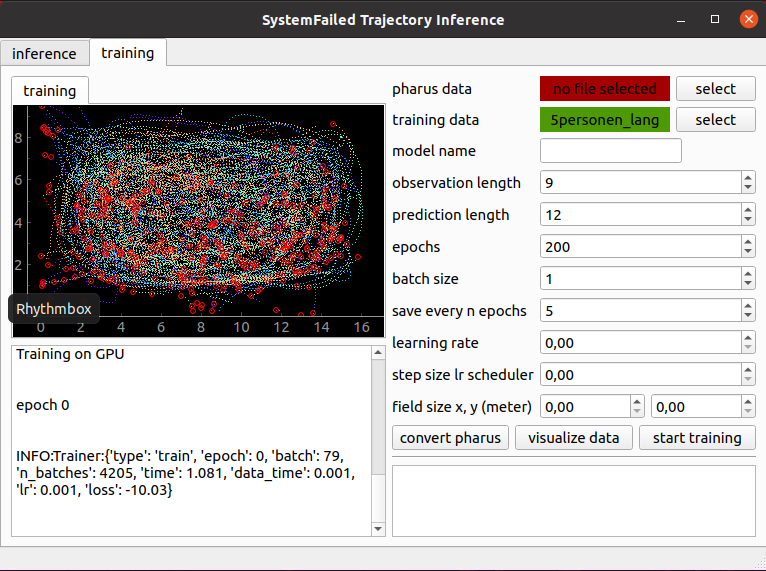

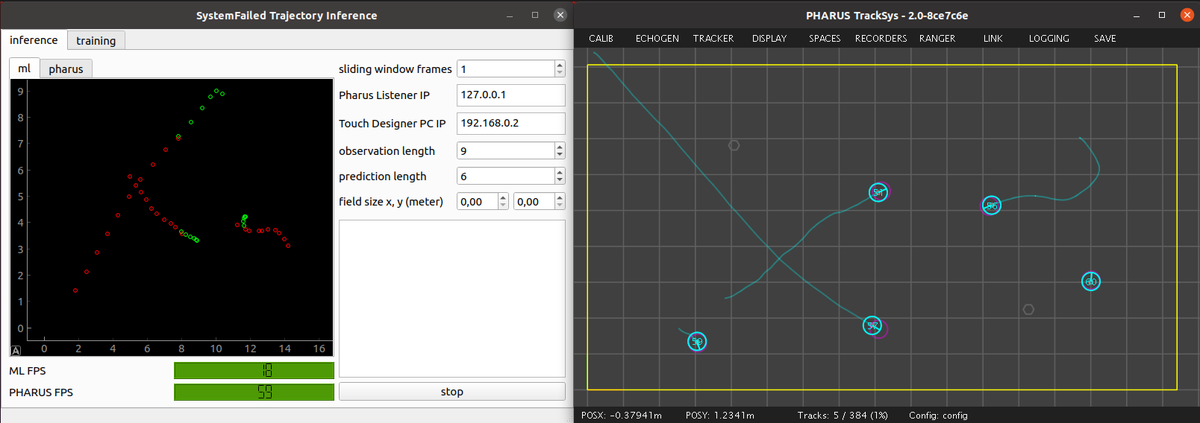

CODING - The nerds develop the machine learning system, the control software for lights and projections, and the geometric calculations to evaluate movements.

The machine learning is based on the Trajectory Forecasting Framework (TrajNet++). The algorithm attempts to predict how people move within groups. The software development can be followed on GitHub.
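To give a rough feel for what "predicting movement" means, here is a minimal sketch of a constant-velocity baseline, a simple reference point often used when evaluating trajectory forecasts. It is not the project's code and not the TrajNet++ API: the function name `predict_constant_velocity`, the `Point` type, and the sample coordinates are all hypothetical, and unlike the learned model it ignores the interactions between people in a group.

```python
from typing import List, Tuple

# Hypothetical type for illustration: a 2D position (x, y) in metres.
Point = Tuple[float, float]


def predict_constant_velocity(track: List[Point], steps: int) -> List[Point]:
    """Extrapolate a track by repeating its last observed displacement.

    This is a naive baseline, not a learned model: it assumes the person
    keeps walking with the same velocity as in the last observed step.
    """
    if len(track) < 2:
        raise ValueError("need at least two observed positions")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = x1 - x0, y1 - y0  # displacement per time step
    return [(x1 + vx * k, y1 + vy * k) for k in range(1, steps + 1)]


# Made-up observations of one person over three time steps.
observed = [(0.0, 0.0), (0.5, 0.25), (1.0, 0.5)]
predicted = predict_constant_velocity(observed, 3)
print(predicted)
```

A learned forecasting model would replace the straight-line extrapolation with predictions that also depend on where the neighbouring people are and how they move.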